The SEO world has been shaken up after Google removed the parameter that allowed 100 results per page on its results pages. The change, applied without any official announcement or prior notice, has disrupted SEO tools such as Ahrefs, Semrush, and Kurranker, and has also affected the metrics in Google Search Console.

In this article, we at Daimatics analyze this change in depth, from its causes to its practical consequences for digital marketing and search ranking professionals.

What parameter did Google remove and why was it so important?

The parameter in question is &num=100. It was appended to the URL of a Google results page to load up to 100 results per page instead of the usual 10. Thanks to this setting, SEO tools like Ahrefs or Semrush could collect information about the top 100 results with a single page load per keyword.

With its removal, these tools must make 10 times as many requests to obtain the same information, which means more resources, higher costs, and longer analysis times.
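To make the tenfold increase concrete, the sketch below builds the result-page URLs a rank tracker would need for one keyword, before and after the change. It is an illustration only. It assumes deeper results are reached through the `start` offset parameter in steps of 10, the function names are hypothetical, no request is sent, and it does not describe how Ahrefs, Semrush, or any other tool actually works.

```python
from urllib.parse import urlencode

BASE_URL = "https://www.google.com/search"


def urls_with_num_parameter(keyword: str) -> list[str]:
    """Before the change: a single URL covered up to 100 results."""
    return [f"{BASE_URL}?{urlencode({'q': keyword, 'num': 100})}"]


def urls_with_pagination(keyword: str, depth: int = 100, page_size: int = 10) -> list[str]:
    """After the change: one URL per page of 10 results (assumed `start` offset)."""
    return [
        f"{BASE_URL}?{urlencode({'q': keyword, 'start': offset})}"
        for offset in range(0, depth, page_size)
    ]


before = urls_with_num_parameter("running shoes")
after = urls_with_pagination("running shoes")
print(len(before), len(after))  # 1 10
```

Under these assumptions, covering the top 100 for a single keyword goes from 1 page load to 10, and the same multiplier applies to every keyword a tool tracks.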

“This change forces SEO tools to completely rethink the way they crawl and process SERP data. It's not just a technical issue: it changes the accessibility of information.” - Daimatics SEO consulting team

But beyond the tool sector, the impact has also reached SEO professionals and website owners, especially in how data is interpreted in Search Console.

Direct impact on Google Search Console: altered impressions and average position

After the change, two recurring patterns have appeared in Search Console performance graphs:

- A noticeable drop in impressions, even on websites with stable traffic

- A sudden improvement in average position (the number moves closer to 1)

To understand this, you need to know how an "impression" is counted in Search Console. Until now, whenever a results page was loaded with the removed parameter, every URL on it (positions 1-100, the equivalent of the first 10 pages) registered an impression, even one sitting in position 95.

Now, without this parameter, only URLs that are actually displayed in the default visible results (Top 10 to Top 20, depending on user behavior) are counted as impressions.

“It's not that your website has lost real traffic, but that Google now only counts what is actually visible to human users, not bots.” - SEO analyst at Daimatics
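The arithmetic behind both effects can be sketched with made-up numbers. The example assumes, as a simplification, that average position is the plain mean of the position recorded for each impression, and that 100-result loads by tools used to add impressions at deep positions. Every figure below is hypothetical.

```python
# Hypothetical impressions for one page: the position it held each time it was shown.
human_impressions = [4, 5, 5, 6]      # real users, first results page
bot_impressions = [38, 52, 71, 95]    # 100-result loads by tools (assumed)


def summarize(positions):
    """Return (impression count, average position) for a list of positions."""
    average = sum(positions) / len(positions)
    return len(positions), round(average, 1)


before = summarize(human_impressions + bot_impressions)
after = summarize(human_impressions)

print(before)  # (8, 34.5)
print(after)   # (4, 5.0)
```

In this toy case impressions are cut in half and the average position moves from 34.5 to 5.0, even though nothing changed in what real users saw or clicked.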

Comparison table: before and after the change

| Aspect | Before the change | After the change |
|---|---|---|
| Visible results per page | Up to 100 (with the parameter) | Only 10 per page |
| Recorded impressions | Down to position 100 | Only positions actually shown to users |
| Average position | Worse (skewed by inflated data) | Better (more realistic) |
| Access to SERP data | Easy and cheap for tools | Expensive and limited |

How SEO tools are reacting: Ahrefs, Semrush, and Kurranker

Ahrefs

CMO Tim Soulo has asked the SEO community whether it is really worth continuing to track positions such as 30, 60, or 90. He argues that these positions may not provide real value and only waste resources.

However, many SEO experts, including our team, partially disagree. In new projects or during growth phases, it is essential to see where your website sits in the semantic universe of a keyword, even if it is in position 70.

Semrush

Their response has been more ambiguous. They have stated that the Top 10 will continue to be shown at all times and that the Top 20 will be updated less frequently (up to every 48 hours). For positions beyond that, they have not specified their technical strategy.

Kurranker

This tool has been very direct: for now, it will only offer data up to the Top 20. They are working on a new solution that will make tracking up to the Top 100 sustainable again.

Did Google do this specifically to block scrapers and AI?

There has been no official statement from Google, but everything suggests so. The decision appears to be part of a strategic move to reduce:

- The massive use of bots and scrapers to monitor SEO rankings

- Data extraction by artificial intelligence systems (such as ChatGPT, Perplexity or Gemini)

In fact, Google's spam guidelines state that crawling search results with bots is explicitly prohibited and may interfere with its machine learning.

Quality Raters and AI Overviews: a new era for SEO

Google has updated its Quality Rater guidelines to include the evaluation of AI-generated responses in AI Overviews. Raters must now determine whether the response is:

- Correct and objective

- Relevant to the search intent

- Sufficiently complete

A specific example: if the AI answers a question with scattered information and without clear data, this will be considered an incorrect answer.

Will AI Mode be the default search? Google contradicts itself

Logan Kilpatrick, product manager at AI Studio, said on X that AI Mode will soon be the default search experience. However, Robby Stein (product manager at Google Search) denied this or, at least, downplayed his colleague's statement.

According to data from SimilarWeb:

- Only 31% of users in the US use AI Mode

- In the UK, the figure is 3.5%

For now, then, AI-powered search remains marginal, but its evolution is worth following.

Advertising on the open web is in decline: Google acknowledges it

In court proceedings, Google has acknowledged that display advertising on the open web is in decline. This statement clashes with the positive message its executives, including Sundar Pichai, have maintained so far.

“The open web is weakening. The high concentration of traffic and competition from other platforms have affected the classic advertising model.” - Google (antitrust statement)

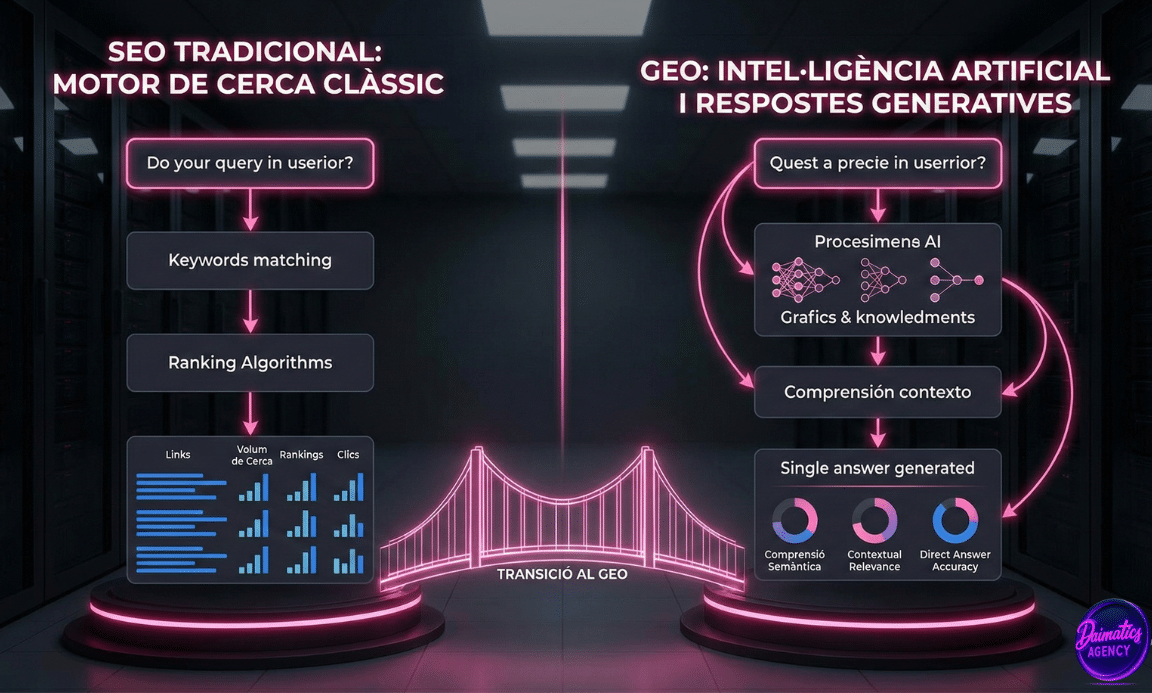

GEO, LLM Optimization, AI SEO... what do we call it now?

A survey by Search Engine Journal shows that the SEO community is divided over what to call this new discipline:

- 36%: AI Search Optimization

- 27%: SEO for AI

- 18%: GEO, LLM Optimization and other new terms

At Daimatics we continue to bet on a classic vision of SEO adapted to the new rules of the game, built on structured data, digital reputation, and a focus on quality.

Conclusions: a new stage for SEO

The disappearance of the 100 results parameter is more than a technical detail. It marks a new era in access to SEO information. Tools will have to reinvent themselves, SEOs will have to fine-tune their strategies, and Google is increasingly closing off its ecosystem.

But it's not all problems. Data will be more precise, strategies more focused, and attention increasingly directed toward real quality, not inflated volumes.

If you want to adapt your strategy to this new SEO scenario, contact Daimatics and we will help you guide your project with real data and an effective strategy.

❓ FAQ about Google's parameter change

The &num=100 parameter was a URL parameter that allowed 100 results per page to be displayed in the SERPs. Google has removed it to make mass scraping more difficult.

Impressions have dropped in Search Console because now only real impressions visible in the top 10 count, not everything down to position 100 as before.

The change does not affect your actual position in Google results. It affects how data is measured and displayed.

The most affected tools are Ahrefs, Semrush, Kurranker, and other mass crawling tools that depended on this parameter.

To adapt, optimize for quality, structure your content, improve citations, and focus on relevance in AI and structured data.